How to submit bug reports

Bugs are an unfortunate part of being a software developer. Sooner or later you’re going to bump into one in a vendor’s software and need to ask the vendor for help: is there a workaround, is there a fix, or can they fix the bug?

In this article, I’m going to explain how to submit bug reports to Software Verify so that you can get the best outcome.

Prerequisites

As an experienced software developer, you know that investigating a bug successfully requires three things:

- Data

- Good communication

- Cooperation

Investigating bugs in a data vacuum is impossible.

If we ask you for data and you refuse to provide it, we cannot investigate the bug.

Similarly, if we ask you for data but you deliberately provide it in a different format so that you can edit (censor) it before sending it to us, there is a good chance you will prevent us from investigating the bug successfully. See the section Bad communication.

Description

Your bug report should include a detailed description of the bug, plus any supporting data in the form of text, images, log files, etc.

The more detailed the description, the better.

Bad bug report:

“Help, your software doesn’t work”.

Amazingly, from time to time, we really do get bug reports like that. What puzzles me is how anyone, let alone an experienced developer, thinks that’s a useful bug report.

Good bug report:

“Hi, I’m using Memory Validator 9.82 on Windows 11 and when I try to monitor a 64-bit native service it fails with this error (see attached). Do you know why this is failing?”

They’ve told us which tool and version they are using, and on which operating system. They’ve told us they are working with a 64-bit service that uses native code only (no .Net), and they included a screenshot of the error.

This is great. We can examine the screenshot, formulate some hypotheses and provide potential solutions or ask additional questions to clarify the bug.

Data

What sort of data is useful?

Always include the following:

- Operating System. For example, Microsoft Windows 10.

- Software Product and Version. For example, Memory Validator 9.46.

- Name of crashing application. This will typically be one of our software tools, or the software that is being monitored by one of our tools.

Plus one or more of the following.

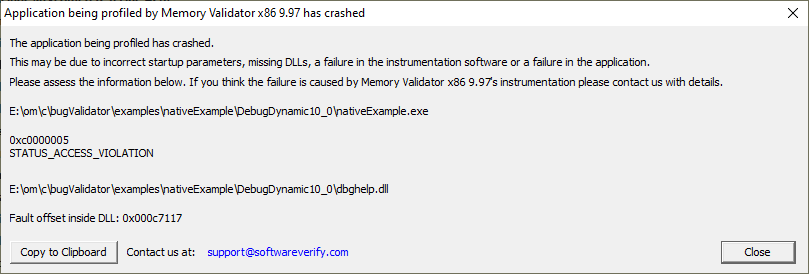

- Event Log Crash. If the tool you are using showed you an event log failure report AND the crashing module is a Software Verify DLL (svl*.dll), send us that information. If you dismissed the report before you could make a copy of it, you can find it again using Event Log Crash Browser. Look for the application that crashed and the appropriate timestamp (most likely the most recent one). Note that times are displayed without taking the timezone into account.

Unfortunately, event log crash reports provide only a crash address, not a callstack.

If the crash is not in a Software Verify DLL, the report doesn’t help us understand the crash. In that case you’ll need to supply one of the following items of crash data.

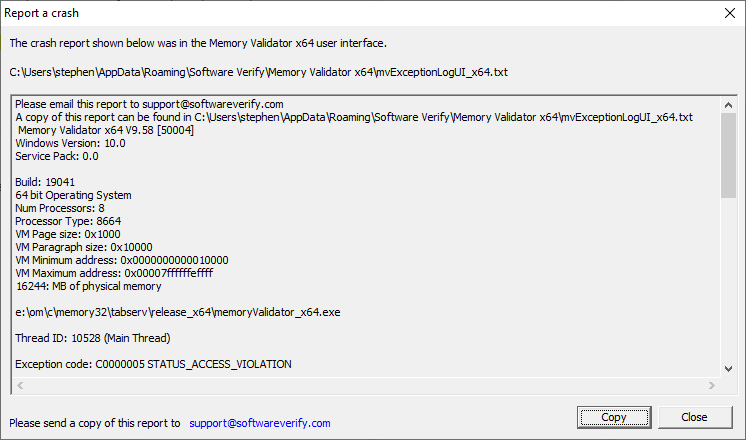

- Exception Report. If the tool you are using showed you an exception crash dialog, send us the contents (copy to clipboard). The various exception reports are stored on disk.

- Crash callstack. If the bug is repeatable, you can collect the crash callstack using Visual Studio, WinDbg or your favourite debugger. This article shows you what data to collect and how to do it.

- Crash minidump. You can use Visual Studio, WinDbg or Exception Tracer to create a minidump of a crashed application.

- Session file plus instructions for reproduction. Session files contain lots of information about the target application being monitored. They do not contain source code. If you send us a session file we can load it and often find the information to diagnose a bug (or rule out numerous possible causes of the bug) very quickly. Some bugs are diagnosed within minutes when we are given a session file.

You can save a session file from the File menu.

If you are reporting a crash in your software, a session file may help us, but please make sure you also send us an exception report, a callstack, or a crash minidump, as described above.

- Settings file so that we can see the settings you are using. If you send us a settings file we can load it and often find the information to diagnose a bug (or rule out numerous possible causes of the bug) very quickly. Some bugs are diagnosed within minutes when we are given a settings file.

You can save a settings file from the Settings menu.

- Environment variable values, so that we can see which values are in place. We’ll ask you for specific environment variable values if we need them.

- Log Files. We have a variety of utilities that can enable many different styles of data logging. If we think this is a good idea for your bug we’ll give you instructions to create a particular log file, then we’ll investigate the log when you provide it to us.

- Test Application plus instructions for reproduction. Depending on the bug, it may be necessary to provide debug information. The test application can be:

  - A demonstration application. A barebones application that demonstrates the problem. This could be as simple as “Hello World!” with some extra code. It is typically provided as source code and project files so that we can build it, and sometimes modify it to make the problem easier to debug.

  - The application you are working on. This could be the full product version, or the evaluation version that is provided to prospective customers. It is typically provided in whatever form you would normally use to distribute your application, so that we can test it.

If you’re not sure what to provide, just provide what you think will help.

Do not try to provide everything in this list.

If we need something else, we’ll ask for it.
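If you prefer to copy the basic details rather than retype them, a small script can gather them for you. The following is a minimal Python sketch under stated assumptions, not a Software Verify tool: the function name `bug_report_basics`, the example values and the variable name `EXAMPLE_VAR` are hypothetical. It copies environment variable values exactly as found, in keeping with the advice in the Bad communication section about not editing requested data.

```python
import os
import platform


def bug_report_basics(product, product_version, crashing_app, env_var_names=()):
    """Collect the basic details for a bug report as plain text.

    env_var_names should list only the variables support has asked for.
    Values are copied exactly as found, with no editing.
    """
    lines = [
        # platform.version() includes the Windows build number, which helps
        # because some Python versions report Windows 11 as release "10".
        f"Operating System: {platform.system()} {platform.release()} (version {platform.version()})",
        f"Software Product and Version: {product} {product_version}",
        f"Name of crashing application: {crashing_app}",
    ]
    for name in env_var_names:
        value = os.environ.get(name)
        if value is None:
            lines.append(f"{name} is not set")
        else:
            lines.append(f"{name}={value}")  # unedited value
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical values: substitute your own product, version,
    # application name and the variable names you were asked for.
    print(bug_report_basics("Memory Validator", "9.46", "myservice.exe", ["EXAMPLE_VAR"]))
```

Paste the output into your bug report as-is, and only list environment variables that we have asked for.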

Data that we don’t want

Please do not send us the source code for your application.

We don’t want to see your source code unless it is for a demonstration program, “Hello World!” etc.

Exception: In very rare situations, we may request access to an individual named file or function because the bug is related to our C++ parsing functionality. We have a C/C++ mangler that preserves the code layout but makes the code unreadable (and is non-reversible) that you can use prior to supplying files to us.

Communication

Submitting a bug report usually results in a bit of back and forth between us and the person/team submitting the bug report.

Cooperation and good communication are key in this exchange. Failure to cooperate, combined with bad communication, leads to nothing happening.

Good communication

Good communication leads to better outcomes, faster.

A key part of good communication is answering all questions in a timely manner, even if the answer is No.

“Yes, we can do that” helps because it means we can move along the proposed path of action.

“Sorry, we can’t do that, but we can do this” helps because it means we can explore alternative courses of action.

“No, we can’t do that, because of XXX” helps because it means we understand there is a problem (XXX is often defence/security related, or sometimes overly cautious lawyers, or perhaps the software engineer you’re communicating with doesn’t have the authority to say Yes).

Knowing there is a problem means we can explore alternative courses of action. Overly cautious lawyers can often be defused with the application of an NDA (non-disclosure agreement). Defence/security related issues – we understand. Some of our staff have worked on defence related projects prior to working at Software Verify.

Example 1

Bug “Hi, I’m trying to track down a bug using Event Log Crash Browser, but I’m getting no data. Any ideas?”

SVL “What operating system are you using?”

Bug “Windows 11”.

We ran some tests and found that the event log XML format had changed in Windows 11. We fixed the bug and released a working version 2 days later.

The customer had a fix four days after reporting the bug.

Example 2

Bug “During startup of my application using Memory Validator the validator crashes suddenly. Just get a dialog box” (picture of dialog box included).

SVL “1) Is this crash repeatable? 2) When this crash happened, had you previously recorded a session with the same instance of Memory Validator?”

Bug “The crash occurs everytime I start my app and always the same address. The crash also occurred in a previous version from April 2023”

SVL. We asked them to record a data stream for us using a special utility designed for this type of bug. They recorded two data streams, both over 400MB in size.

We spent two days playing these data streams back hoping to reproduce the crash. One of the data streams did cause the crash. We eventually tracked the crash to an obscure bit of code that hadn’t been touched in years, but which was causing a very subtle memory corruption that was now coming to light because the multi-threaded nature of Memory Validator’s internals had changed. This bug would never have been found without either the real application to test, or a data stream recorded from that application.

The customer had a fix two days after reporting the bug.

We can’t promise turnaround times as rapid as these, but these examples do show what cooperation and good communication can enable.

Bad communication

Examples of bad communication:

- Ignoring questions. If you don’t reply, or don’t tell us why you can’t answer a question, we can’t move forward with investigating the bug.

- Failing to supply the requested data. If you don’t tell us why you can’t supply the data, we can’t move forward with investigating the bug.

- Supplying the requested data in a different format from the one requested. You don’t know why we asked for the data in that format. By providing it in a different format you reduce the scope of our investigation, and may prevent us from correctly determining the cause of the bug you are reporting.

- Supplying the requested data in text format, and editing it prior to providing it. You don’t know what data we are looking for. By editing the data you may be destroying the information we are looking for that will help us solve your bug.

- Refusing to supply a test application that demonstrates the bug, claiming that we (Software Verify) can simply reproduce the bug by creating an application that uses technologies A, B and C. Firstly, most bugs are not so easily reproduced. We’ve been doing this for 22 years and know that a customer-supplied demonstration application will demonstrate the bug correctly, whereas an application that we create will not necessarily reproduce it (different compiler, different libraries, different compiler settings, different linker settings, not to mention different source code). Even if our application does demonstrate a bug, it may not be the bug you are reporting. Suggesting this option shows a naive understanding of the problem space.

There are important, possibly unintended, negative side effects caused by failing to answer questions and/or failing to supply data.

It appears aloof and arrogant.

You’re also wasting the time of the support staff you are requesting help from. Do not be surprised when this is not received well.

Example 1

A customer approached us with a stack buffer overrun bug deep inside Microsoft’s clr.dll when profiling a mixed mode application, with the originating code coming from the .Net Profiler enter/leave stubs via Memory Validator’s BSTR monitoring code. Our requests to test the faulting application were ignored. This particular bug requires repeated tests to be run against the faulting application until we can identify the settings in Memory Validator that cause the failure. Our own tests with mixed mode applications show no problems. This is an application specific (or possibly machine specific) failure and cannot be debugged without access to the faulting application.

This bug remains unfixed because we have no information to work with.

Example 2

A customer reported problems with symbol servers. We requested they send us the session file for the session where some symbols were not downloaded. We also requested the settings file and the value of one environment variable. These requests were ignored, but a text dump of the diagnostic tab was provided. The text dump had been edited, deleting all symbol server paths from the data, even though they were asking us to look into a symbol server related bug. The editing was so clumsy that they also deleted important information unrelated to symbol paths. Although part of the cause of the failure was visible in the information provided, it was impossible to diagnose the bug from that information alone.

The really sad thing about this example is the data was present in the session we’d asked for, but they refused to provide it to us.

Three months later, this fixable bug is still unfixed because they won’t share a session file with us. We could probably have had this bug fixed in 2 days if they had cooperated. You can’t help some people, sadly.

Conclusion

You have a choice: you can submit good bug reports and cooperate with good communication, or you can shoot yourself in the foot by refusing to cooperate.

The former course of action benefits four groups of people:

- Your company. You get bug fixes faster.

- Your customers. Your software is more reliable because you can investigate software quality issues that you couldn’t before the bug was fixed.

- Software Verify. We get to resolve bugs in a timely manner, with no open-ended “Wonder what the problem was with the bug?” issues.

- Software Verify customers. A bug fix for one customer benefits all customers.

The latter course of action benefits no one and actively harms the person reporting the bug because they don’t get a bug fix for the bug they reported, ironically because of their own inaction.