The Six Waves of Computing

Last year I was lucky enough to be in the audience when Hermann Hauser gave the keynote speech for the Discovering Startups talks presented by Cambridge Wireless. Later in the year, during the SVC2C event, Hermann Hauser again referred to his Waves of Computing idea.

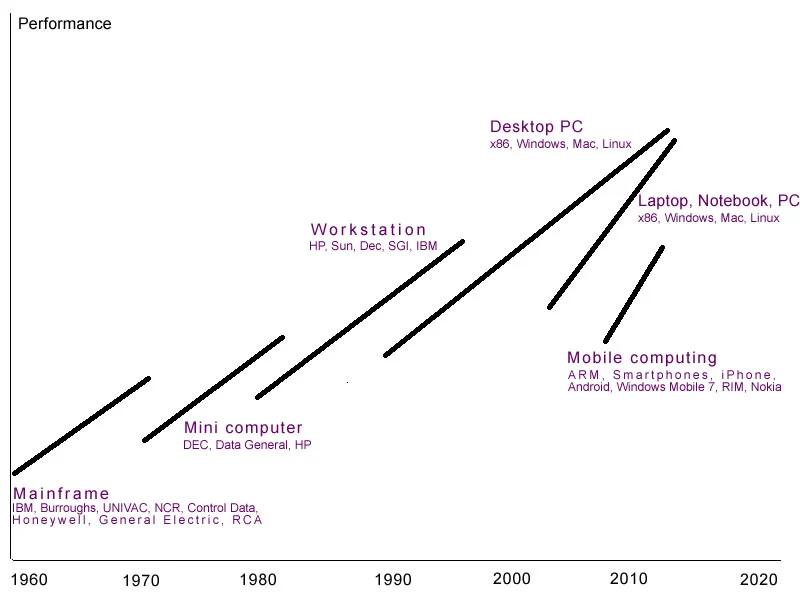

I’m going to cover what I remember of his Waves of Computing idea in this post. I think the basis of the Waves of Computing idea was that there have been five waves of computing. I’ve expanded this to six, as it makes a bit more sense to me. I think you could argue that a few other waves could also be added (analogue and valve computing at the beginning and home computing in the middle).

I’ve recently read some incredible books about innovation (“Innovator’s Dilemma”, “Seeing What’s Next”), disruptive ideas (“Blue Ocean Strategy”) and alternative thinking (“Different”, Youngme Moon). The ideas in these books dovetail nicely with the Waves of Computing idea.

The image is for illustrative purposes only. I’ve made approximate attempts to get date ranges about right, but you can argue about them either way. Likewise, the performance scale is relative. I’m not plotting any particular performance metric (MIPS, memory, disk size, disk speed, I/O bandwidth etc), just the overall “is the consumer satisfied” metric. That is why the PC and laptop dates start so late compared to the technology: you could have had a laptop 15 years earlier than I’ve drawn, but it wasn’t up to much.

What you can see from the graphic is that over time the technology of the day is replaced by a newer technology. Mainframes get replaced by minicomputers. Minicomputers get replaced by workstations. PCs get so powerful they become workstations. Miniaturisation allows laptops to go from being luggable (1980s) to portable, powerful and ubiquitous (2000s). Smartphones, popularised by the Apple iPhone in 2007, are starting to make inroads into the laptop market. They are not as powerful yet, and the software and compatibility issues are yet to be ironed out, but you can see it could happen. I’ve met people with an HTC Desire and when I’ve questioned them about their use of it, their answer is “I do nearly all of my work on this, hardly ever use my PC anymore”. That’s a pretty emphatic statement of where they are going with their usage.

What is implied by the graphic is that after three decades of dominance by the x86 platform and its many variants, during which the x86 killed off nearly every RISC processor and also Motorola’s excellent 68000 platform, the x86 is under attack by what many people would have thought an unlikely attacker: the ARM processor. It’s low power (by design, unlike the x86) and it’s very efficient (I’ve met several people in Cambridge who have told me about their RISC PC that could emulate DOS and still run faster than a real PC). Could it be that if this graph is redrawn in 2020, the x86 is on the way out and the ARM is in the ascendant (after a very long wait)?

The irony is that Intel is indirectly responsible for the creation of the ARM chip. You’ll need to ask Hermann Hauser about that though. It’s a good story.

Smartphones

Imagine if you could take your smartphone home and plug it into a dock, so that it displays on a nice high resolution screen and has a keyboard, mouse and external peripherals (printer, DVD player, etc). The screen is also a touchscreen (OK, so why do I need the mouse?).

All you need for smartphones to replace PCs and laptops is:

- Universal docking standard to allow smartphone hardware to dock with a keyboard, mouse, high resolution touch screen and external storage.

- Universal software standard so that smartphone software can recognise external hardware and use it when available.

I can’t see the above two conditions ever happening with Apple. With Apple, it’s the ‘i’ way or the highway. Thus we have to look to Android, Microsoft and RIM for this ideal docking solution for a smartphone.

An early attempt at this future is the Motorola Atrix 4G (Android) smartphone and dock, available in the USA.

Future

After smartphones, what will be next?

Some people are working on computing embedded in clothing. Others are working on computing embedded inside humans. There is already a miniature sensor that can be embedded in a patient’s eye to monitor eye pressure. But that is hardly personal computing, is it? I think for computing embedded in humans you run into a variety of health-related issues (cooling, radiation, toxicity of construction, rejection) and form factor issues. For embedding in prosthetics most of these issues go away, so perhaps that is a future for some people.

Looking forward 10 years or more, this poses some interesting questions for developers of processors, hardware, operating systems and software applications.

I hope you found the graphic interesting. If you have any comments or think I omitted anything please let me know.